Potential Problems

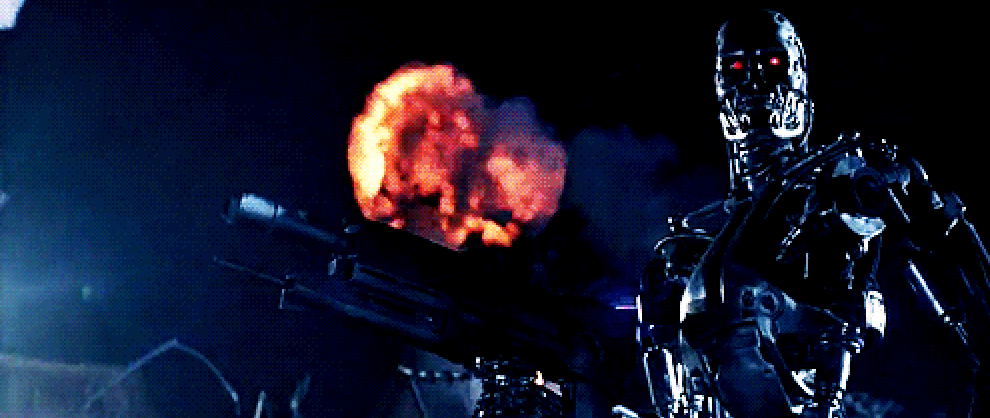

AI remains controversial within the scientific community, sparking debate over both its potential and the dangerous outcomes it could produce. High-profile figures such as Elon Musk and Stephen Hawking warned against the dangers of AI in an open letter, which stated, “Because of the great potential of AI, it is important to research how to reap its benefits while avoiding potential pitfalls” (Live Science). The concerns raised include the destruction of humanity, loss of control over technology, and the unethical use of AI in warfare. To ease these worries, AI enthusiasts have offered suggestions such as a United Nations ban on developing AI to harm humans and a general set of guidelines that AI developers must follow (Toronto Life). In addition, a vocal movement has pushed AI developers to reject offers from the Communications Security Establishment and similar institutions, since national security goals could lead to AI being abused to kill humans in warfare.

Will AI replace the need for human labor? One estimate puts the technical feasibility of automating predictable physical work, such as welding, soldering on an assembly line, food preparation, and packaging, at 78%. How will the industrial world handle the resulting loss of employment? Ideally, intellectual careers will open up to replace the physical work taken over by machines, but the risk of unemployment remains a frightening concern. As machines take over jobs once done by human beings, will humanity itself begin to decline? For better or for worse, people of the future will spend more and more time interacting with machines rather than with other people. Video game and cell phone addiction are already pulling human attention away from other people. Will we begin to neglect the need to connect with those around us? Some people may see the future of AI as a depressing and unfulfilling era of disconnection and isolation.

Machines are not perfect, and error is inevitable. For example, when a self-driving car hit a woman in Arizona, operations were shut down as people responded with distrust and fear. As machines take control of more and more operations, humans are left at the whim of those machines. Machines cannot be reasoned with, and for many people they are extremely complex and impossible to understand. How much trust can people put in something they do not understand? The rise of AI may increase stress and fear among the public. As more and more knowledge is poured into AI, rumors persist of robots taking over the world or killing off their human masters. Future trends will entrust computers with even more power. However, because computers cannot place situations in a human context, the solutions and actions a computer chooses may not always be what the user wants.

Could AI be used for war? For robbery? With great technology come great risks. Regulation of AI, and of the tasks it can be used for, will become an ever-hotter debate as AI development continues. Security will be threatened as AI makes tracking and hacking easier to accomplish, and standard computer protection systems may be no match for the power of AI. AI advancements also raise the question of how AI itself should be treated. When will AI be considered something that has unalienable rights? For example, “feeling robots” are designed to have emotions, just like a human or an animal. Do “feeling robots” cross the line from machine to living being? The safety of both humans and AI is a pressing issue as companies such as Next Touch press forward with new AI development.